A version of this talk was presented as the keynote for the annual meeting of the Association of College and Research Libraries – Delaware Valley Chapter, in Philadelphia, PA on November 6, 2014.

Thank you very much, and thank you especially to Terry Snyder for inviting me to speak with you all this morning. Today is a good day to talk about the Schoenberg Institute for Manuscript Studies (SIMS); after this talk I will be heading down the hall to attend the annual SIMS Advisory Board meeting, and tomorrow and Saturday I’ll be attending the 7th annual Schoenberg Symposium on Manuscripts in the Digital Age. So this is an auspicious week for all things SIMS.

The topic of this talk is the Schoenberg Institute for Manuscript Studies and how it may be considered an object lesson for libraries interested in supporting digital scholarship. Penn Libraries has invested a lot in SIMS, and while much of SIMS will be very specific to Penn, I hope our basic practices might provide food for thought for other institutions interested in supporting research and scholarship in the library.

SIMS is a research institute embedded in the Kislak Center for Special Collections, Rare Books and Manuscripts in the University of Pennsylvania Libraries. It exists through the generosity and vision of Larry Schoenberg and his wife, Barbara Brizdle, who donated their manuscript collection (numbering about 300 objects) to Penn Libraries, with the agreement that the Libraries would set up an institute to push the boundaries of manuscript studies, including but not limited to digital scholarship. (Although my job focuses on the digital, and indeed that term features in my official title, I also have responsibilities for our physical manuscript collections.) Penn did this, and SIMS was launched on March 1, 2013. As a research institute we develop our own projects and push our own agenda, and although many of our projects are highly collaborative we do not “serve” scholars; we are scholars.

Guided by the vision of its founder, Lawrence J. Schoenberg, the mission of SIMS at Penn is to bring manuscript culture, modern technology and people together to bring access to and understanding of our intellectual heritage locally and around the world.

We advance the mission of SIMS by:

- developing our own projects,

- supporting the scholarly work of others both at Penn and elsewhere, and

- collaborating with and contributing to other manuscript-related initiatives around the world.

SIMS has 13 staff members, but it is helpful to know that of this list only two are dedicated to SIMS work full-time (Lynn Ransom, Curator, SIMS Programs and Jeff Chiu, Programmer Analyst for the Schoenberg Database of Manuscripts). Everyone else on staff is either part time (the SIMS Graduate Fellows) or has responsibilities in other areas of the libraries, and beyond. Mitch Fraas, for example, is co-director of the Penn Digital Humanities Forum, a hub for digital humanities at Penn hosted through the School of Arts and Sciences.

Over the last couple of weeks, as I have been considering what I might say to you all this morning, I have also been spending a lot of time working on the Medieval Electronic Scholarly Alliance, a federation of digital medieval collections and projects that I co-direct with Tim Stinson, a professor of English at North Carolina State University. MESA is essentially a cross-search for many and varied digital collections, enabling one (for example) to search for a term – we have a fuzzy search that will include variant spellings in a search – and then one can facet the results by format (for example illustrations, or physical objects), discipline, or genre. One can also federate by “resource”, searching only those items that belong to particular collections.

The work that I’ve been doing for MESA over the past two weeks involves taking data provided to us and converting it from whatever format we get into the Collex RDF XML format required by MESA. In some cases, this is relatively easy. The Walters Art Museum, for example, through its Digital Walters site, provides high-resolution images of their digitized manuscripts using well-described and consistent naming conventions, and also provides TEI-XML manuscript descriptions that are equally consistent as well as being incredibly robust. These files are all released under a Creative Commons Attribution-ShareAlike 3.0 Unported license, and they are easy to grab or point to once you know the organization of the site and the naming conventions.

Not all project data is so simple to access.

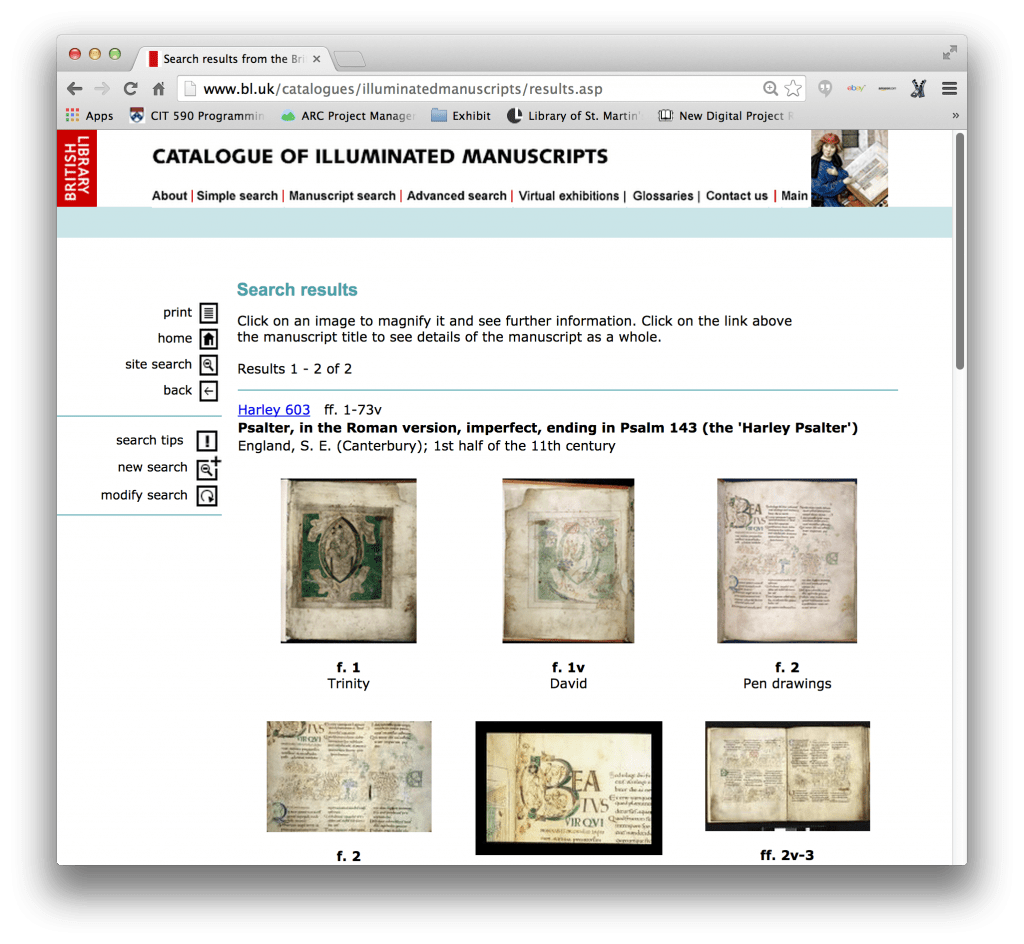

Although the data in the British Library Catalogue of Illuminated Manuscripts is open access (the metadata is under a Creative Commons license, and the images are in the public domain), it is “black boxed” – trapped behind an interface. The only way to access the data is to use the search and browsing capabilities provided by the online catalog. To get the data for MESA, our contact at the BL sent me the Access database that acts as the backend for the website, and I was able to convert that to the formats I needed to be able to generate our RDF.

So what does all this have to do with SIMS? Well, as I was doing this conversion work, I had a bit of an epiphany. I realized that pretty much everything we do at SIMS can be described in terms of

THE REUSE OF DATA

And as I thought about how I might describe our various projects in terms of data reuse, I also realized that reuse of data is not new. In fact, it is ancient, and thinking in these terms puts SIMS at the tail end of a long and storied history of scholarship.

A BRIEF HISTORY OF DATA REUSE

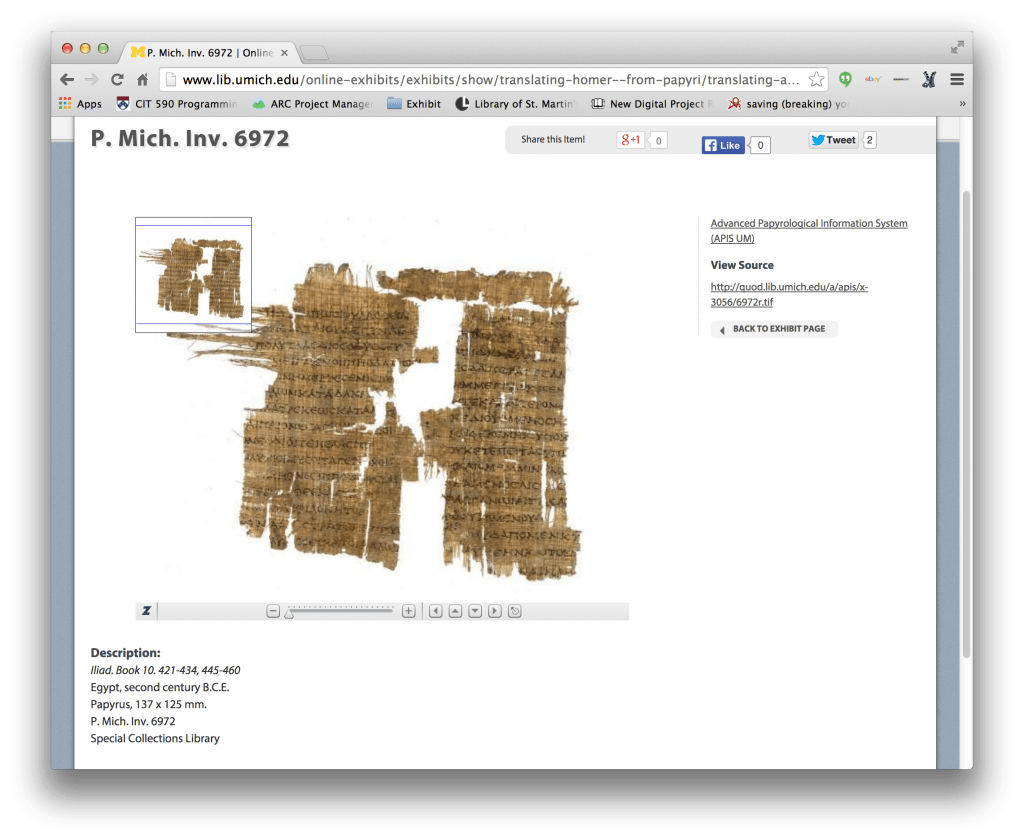

I’m not starting at the beginning, but I do want to give you a sense of what I mean when I say that data has been reused for the past couple thousand years (at least). One of my favorite early examples would have to be ancient Greek epics, such as the Iliad.

Here is a papyrus fragment, housed in the University of Michigan Libraries and dating from the second century BCE, containing lines from Book 10 of the Iliad. Thousands of similar fragments survive, containing variant lines from the poem.

And this is a page from the manuscript commonly known as Venetus A, Marciana Library 822, the earliest surviving complete copy of the Iliad, dating from the 10th century (a full 12 centuries younger than the papyrus fragment). In addition to the complete text, you can see that there are many different layers of glosses here: marginal, interlinear, intermarginal. These glosses contain variant readings of the textual lines, variants which are in many cases reflected in surviving fragments.

My next example is from a Glossed Psalter from our collection, Ms. Codex 1058, dating from around 1100. This manuscript is also glossed, but rather than variant readings, these glosses are comments from Church Fathers, pulled out of the context of sermons or letters or other texts, and placed in the margin as commentary on the psalm text.

This example is a bit later, an early 14th century Manipulus Florum, Ms. Codex 1640. Like the glossed psalter, quotes from the church fathers and other philosophers are again pulled out of context, but in this case they are grouped together under a heading – in this example, the heading is “magister”, or teacher, and presumably the quotes following describe or define “magister” in ways that are particularly relevant to the needs of the author.

Text is not the only type of data that can be reused, historically or now. We can also reuse material. Can you all see the sign of material reuse here? Check the top and bottom of the page. This is a palimpsest. What’s happened here is that a text was written on some parchment, and then someone decided that the text was no longer important. But parchment was expensive, so instead of throwing it away (or just putting it on a shelf and forgetting about it) the text was washed or scraped off the page, and new text was written over top. We can still see the remnants of the older text.

This is a page from LJS 395, a 13th century manuscript fragment that’s been repurposed to form part of the binding for a 16th century printed book. This is really typical reuse, and many fragments that survive do so because they were used in bindings.

How about this one?

This is a trick question. This is an opening from a 15th century book of hours from our collection, to compare with this.

This 17th century Breviary Collage was created by literally cutting apart a 15th century Flemish Breviary and pasting the scraps onto a square of cardboard. It is a bit horrifying, but it’s my favorite example of both reuse of material and, if not reuse of text, then reuse of illustration. Certainly the content is being reused as much as the material. Although I would never do this to a manuscript (and I hope none of you would do this either), I feel like I have a kindred spirit in the person who did this back in the 1800s, someone who saw this Breviary as a source of data to be repurposed to create something new.

I do this, only I do it with computers. Here is my collage.

Okay, it’s not a collage, it’s a visualization of the physical collation of Penn LJS 266 (La generacion de Adam) from the Schoenberg Collection of Manuscripts, just one created as part of our project to build a system for visualizing the physical aspects of books in ways that are particularly useful for manuscript scholars. Collation visualization creates a page for each quire, and a row on that page for each bifolium in the quire. On the left side of each row is a diagram of the quire, with the “active” bifolium highlighted. To the right of the diagram is an image of the bifolium laid out as it would be if you disbound the book, first with the “inside” of the bifolium facing up, then the “outside” (as though the bifolium is flipped over).
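The quire-and-bifolium layout just described can be sketched in a few lines of Python. This is only an illustration, not the Collation code itself (which is written in XSLT): the function names are invented here, and the sketch assumes a regular quire with no missing, added, or replaced leaves, and folios numbered consecutively.

```python
def bifolia(first_folio: int, leaves: int) -> list:
    """Pair the conjugate leaves of a regular quire, outermost bifolium first.

    Assumption: the quire is regular (an even number of leaves, none missing).
    """
    if leaves <= 0 or leaves % 2:
        raise ValueError("a regular quire has a positive, even number of leaves")
    last_folio = first_folio + leaves - 1
    return [(first_folio + i, last_folio - i) for i in range(leaves // 2)]


def rows_for_quire(first_folio: int, leaves: int) -> list:
    """Build one row per bifolium, listing the sides shown in each view.

    With the inside of the sheet facing up, the verso of the earlier leaf sits
    to the left of the recto of the later leaf; flipped over, the verso of the
    later leaf sits to the left of the recto of the earlier leaf.
    """
    rows = []
    for earlier, later in bifolia(first_folio, leaves):
        rows.append({
            "bifolium": (earlier, later),
            "inside": (f"{earlier}v", f"{later}r"),
            "outside": (f"{later}v", f"{earlier}r"),
        })
    return rows


# A quire of eight leaves beginning at folio 1.
for row in rows_for_quire(1, 8):
    print(row)
```

Handling real manuscripts would mean dropping the regular-quire assumption, which is exactly the information a collation formula carries.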

To generate a visualization in the current version of collation visualization, 0.1 (the source XSLT files for which are available via my account on GitHub), I need two things: manuscript images, and a collation formula (the collation formula describes the number of quires in a codex, how many folios in each quire, if any folios are missing, that kind of thing). To create this particular visualization, first I needed to get the images.

Our digitized manuscripts are all available through Penn in Hand, which is very handy for looking at manuscript images and reading descriptive information, but much like the British Library database we looked at earlier, it’s a black box.

It is possible to use “ctrl-click” to save images from the browser, but the file names aren’t accessible (my system reverts to “resolver.jpg” for all images saved from PiH, and it’s up to me to rename them appropriately).

The collation formula is in the description, and it’s easy enough for me to cut and paste that into the XSLT that forms the backbone of Collation 0.1.

It is actually possible to get XML from Penn in Hand, by replacing “html” in the URL with “XML”.

The resulting XML is messy, but reusable – a combination of Dublin Core, MARC XML, and other various non-standard tagsets.
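As a rough sketch of the URL substitution mentioned above: the address below is a made-up placeholder rather than a real Penn in Hand URL, and the sketch assumes that “html” appears as a lowercase file extension at the end of the URL path and that the replacement is also lowercase. The actual URLs may differ on both points.

```python
from urllib.parse import urlsplit, urlunsplit


def xml_url(html_url: str) -> str:
    """Swap a trailing .html in the URL path for .xml, leaving the query intact.

    Assumption: the record page ends in ".html"; this is a guess about the URL
    shape, not a documented interface.
    """
    parts = urlsplit(html_url)
    if not parts.path.endswith(".html"):
        raise ValueError("expected a path ending in .html")
    new_path = parts.path[: -len(".html")] + ".xml"
    return urlunsplit(parts._replace(path=new_path))


# Hypothetical record address, for illustration only.
print(xml_url("http://example.org/records/record.html?id=EXAMPLE_123"))
```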

Because we know how important it is to have clean, accessible data (indeed my own work and other SIMS projects depend on it), we have been working for the past year on OPenn, which will publish high-resolution digital images (including master TIFF files) and TEI-encoded manuscript descriptions (generated from the Penn in Hand XML) in a Digital Walters-style website – Creative Commons licenses for the TEI, and the images will be in the public domain. OPenn is still in development, but will be launched at the end of 2014.

Having consistent data for our manuscripts in OPenn will enable me to do with our data what I already did with the Digital Walters data: programmatically generate collation visualizations for every manuscript in our collection. Because the Digital Walters data was accessible in a way that made it easy for me to reuse it, and was described and named in such a way that it was easy to figure out which images match up with which folio numbers, I was able to generate collation visualizations for every manuscript represented in the Digital Walters that includes a collation formula, and I was able to do it in a single afternoon. The complete set of visualizations is available here.

Version 0.2 of Collation will be based on a form (this is the current mock-up of how the form will look). Instead of supplying a collation formula, one would essentially build the manuscript, quire by quire, identifying missing, added, and replaced folios, and the output would be both a visualization and a formula.

Why do this? It is a new way of looking at manuscripts in a computer, completely different from the usual page-turning view, and one that focuses on the physicality of the book as opposed to its state as a text-bearing object. A new view will hopefully lead to new research questions, and new scholarship.

Moving on from Collation, the standard-bearing project for SIMS (and one that predates SIMS itself by many years) is the Schoenberg Database of Manuscripts (SDBM). This is a project that reuses data on a massive scale, and does it to great effect.

This photo is the first entry in the catalog of manuscripts at the University of Aberdeen Library, written by M. R. James. This entry, and other entries from this catalog, and from many other library and sales catalogues, have been entered into the SDBM.

Here is that same entry in the current version of the catalog. However! This year Lynn Ransom received a major grant from the NEH to convert the database to new technologies, and I’d rather show you that version.

So, here is that same entry again in the new version of the Schoenberg Database, which is currently under development. “What is the big deal?” I hear you ask. As well you may. Let me show you a different entry from that same catalogue.

You can see in this example, on the “Manuscript” line: “This is 1 of 8 records referring to SDBM_MS_5688.” The SDBM is in effect a database of provenance – it records, not where manuscripts are now but where they have been noted over time, through appearances in sales and collections catalogues. This manuscript has eight records representing catalogs dated from 1829 to 1932. This enables us to trace the movement of the manuscript during the time represented in the database.
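The idea of several catalogue records pointing at one manuscript can be sketched as a simple grouping step. The field names and the sample rows below are invented for illustration (only the 1829 and 1932 bounds echo the example above), and this is not how the SDBM itself is implemented.

```python
from collections import defaultdict

# Invented sample records: each one is a catalogue entry that has been linked
# to a manuscript identifier. Field names are hypothetical.
SAMPLE_RECORDS = [
    {"manuscript": "MS_A", "year": 1932, "source": "Catalogue 3"},
    {"manuscript": "MS_B", "year": 1880, "source": "Catalogue 2"},
    {"manuscript": "MS_A", "year": 1829, "source": "Catalogue 1"},
    {"manuscript": "MS_A", "year": 1901, "source": "Catalogue 2"},
]


def provenance_timeline(records):
    """Group records by manuscript and sort each group by catalogue year."""
    by_manuscript = defaultdict(list)
    for record in records:
        by_manuscript[record["manuscript"]].append(record)
    return {
        manuscript: sorted(group, key=lambda r: r["year"])
        for manuscript, group in by_manuscript.items()
    }


for manuscript, group in provenance_timeline(SAMPLE_RECORDS).items():
    print(manuscript, [(r["year"], r["source"]) for r in group])
```

Reading a group in date order gives the trail of sightings for that manuscript, which is the sense in which the database records provenance.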

Why create the Schoenberg Database? Although it was begun by Lawrence Schoenberg as a private database, which enabled him to track the price of manuscripts, we develop it now to support research around manuscript studies, and around trends in manuscript collecting. Study of private sales in particular could be useful in other areas of study, such as economic history (since manuscripts are scarce, and expensive, and people will be more likely to purchase them and pay more money for them when they have money to spare).

A new project, one that we have been working on just this year, is Kalendarium. Instead of a database consisting of manuscript descriptions from catalogs, Kalendarium will be a database consisting of data from medieval calendars themselves.

This is a couple of pages of a calendar from Penn Ms. Codex 1056, a 15th century Book of Hours. Calendars, common in Books of Hours, Breviaries and Psalters, essentially list saints and other celebrations for specific days of the month. Importance may be indicated by color, as you can see here: some saints’ names are written in gold ink while most are alternating red and blue (red and blue being equally weighted, and gold used for more important celebrations).

A major expectation of Kalendarium is that the data will be generated through crowdsourcing, that is, we’ll build a system where librarians can come and input the data for a manuscript in their collection, or scholars and students can input data for a calendar they find online, or while they are looking at a manuscript in a library. The thing is, transcribing these saints’ names can be difficult, even for someone trained in medieval handwriting. So, instead of transcriptions, we’ll be enabling people to match saints’ names and celebrations to an existing list. And where do we get that list?

Ask and ye shall receive. In the 1890s, Hermann Grotefend published a book, Zeitrechnung des deutschen mittelalters und der neuzeit… (Hannover, Hahn, 1891-98), that included a list of saints, and the dates on which those saints are venerated. And it’s on HathiTrust, so it’s digitized, so we can use it!

Well, it’s in Portable Document Format, more commonly known as PDF. Like Penn in Hand and the British Library Catalog of Illuminated Manuscripts, PDF is another kind of black box. Although it’s fine for reading, it’s not good for reuse (there are ways to extract text from PDF, although it’s usually not very pretty). Luckily, we were able to find another digital version.

Hooray!

This one’s in HTML. Not ideal, not by a long shot, but at least HTML provides some structure, and there is structure internal to the lines (you can see pipes separating dates, for example). Doug Emery, Special Collections Digital Content Programmer and the SIMS staff member responsible for Kalendarium, has been working with a collaborator in Brussels to generate a usable list from this HTML that we can incorporate into Kalendarium as the basis for our identification list.
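To give a sense of what turning such HTML into a usable list involves, here is a minimal sketch. The line format is an assumption made purely for illustration (a name followed by pipe-separated dates written as day.month); the real file's layout is not shown here, and this is not the code being used for Kalendarium.

```python
import re
from html import unescape

TAG = re.compile(r"<[^>]+>")  # crude tag stripper, adequate for a sketch


def parse_line(line: str) -> dict:
    """Split one line into a name and a list of (month, day) tuples.

    Assumed (hypothetical) format: "Name | DD.MM | DD.MM", possibly wrapped
    in HTML tags and containing HTML entities.
    """
    text = unescape(TAG.sub("", line)).strip()
    name, *date_fields = [field.strip() for field in text.split("|")]
    dates = []
    for field in date_fields:
        if not field:
            continue  # tolerate empty fields between pipes
        day, month = field.split(".")
        dates.append((int(month), int(day)))
    return {"name": name, "dates": dates}


# Invented example line, not taken from the actual source.
print(parse_line("<li>Exemplum &amp; Socii | 21.01 | 28.01</li>"))
```

Even a sketch like this shows why the internal structure matters: the pipes give a program something dependable to split on, which running text extracted from a PDF generally would not.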

We have a prototype site up; it’s not public and it’s only accessible on campus now. We’ve been experimenting, and you can see a handful of manuscripts listed here.

Similar to Collation 0.2, in Kalendarium you’re using the system to essentially build a version of your calendar. You can identify colors, and select saints from a drop-down list. Unfortunately we have already found that many saints that are showing up in our calendars aren’t in Grotefend, or they are celebrated on dates not included in Grotefend; but this is an opportunity for us to contribute to the list in a major way.
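The two kinds of gap mentioned above (a saint missing from the list, or a saint present but on a different date) can be told apart with a very small check. The reference data and names below are placeholders, not entries from Grotefend, and the function is a sketch rather than Kalendarium's actual logic.

```python
# Placeholder reference list: name -> set of (month, day) feast dates.
REFERENCE = {
    "Saint A": {(1, 21)},
    "Saint B": {(2, 3), (2, 4)},
}


def check_entry(name: str, month: int, day: int, reference: dict) -> str:
    """Classify a calendar entry against the reference list."""
    if name not in reference:
        return "name not in list"
    if (month, day) not in reference[name]:
        return "date not in list"
    return "match"


# Invented calendar entries to exercise each outcome.
for entry in [("Saint A", 1, 21), ("Saint B", 2, 5), ("Saint C", 3, 1)]:
    print(entry, "->", check_entry(*entry, REFERENCE))
```

Entries that come back as anything other than a match are the candidates for contributing back to the list.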

Why do this at all? Calendars are typically used to localize individual manuscripts – if we see that particular saints are included in a calendar, we can posit that the book containing that calendar was intended to be used in the areas where those saints were venerated. However, if we scale up, we’ll be able to see larger patterns: veneration of saints over time, saints being venerated on different days in different places, and we should be able to see new groupings of books as well.

Another set of projects SIMS is involved in, the Penn Parchment Project in 2013 and the Biology of the Book Project starting in 2014, involves testing the parchment in our manuscripts – literally reusing the manuscript, extracting data from the material itself. This involves taking small, non-destructive samples to gather cells from the surface of the parchment and testing them to see what type of animal the parchment is made from. Results are interesting; as part of the Penn Parchment Project, an individual who wishes to remain anonymous made expert identification of ten manuscripts from the Penn collection, and got only five of them correct. Clearly, parchment identification could benefit from a more scientific approach. More recently we have joined Biology of the Book, a far-reaching collaboration (including folks at University of York in the UK, Manchester University, The Folger Shakespeare Library, the Walters Art Museum, Library of Congress, University of Virginia, The Getty, and others) to begin the slow process of moving forward a much larger project with the aim to perform DNA analysis on larger numbers of manuscripts. Very little is actually known about the practices surrounding medieval parchment making, including the agricultural practices that supported the vast numbers of animals that were used to create the manuscripts that survive today (and, of course, all those that don’t survive). We think of parchment as an untapped biological archive, and a database containing millions of DNA samples would enable us to discover the number of animals used to build manuscripts, where those animals were bred (and how far they were imported and exported), what breeds were used – many questions that are simply impossible to answer now.

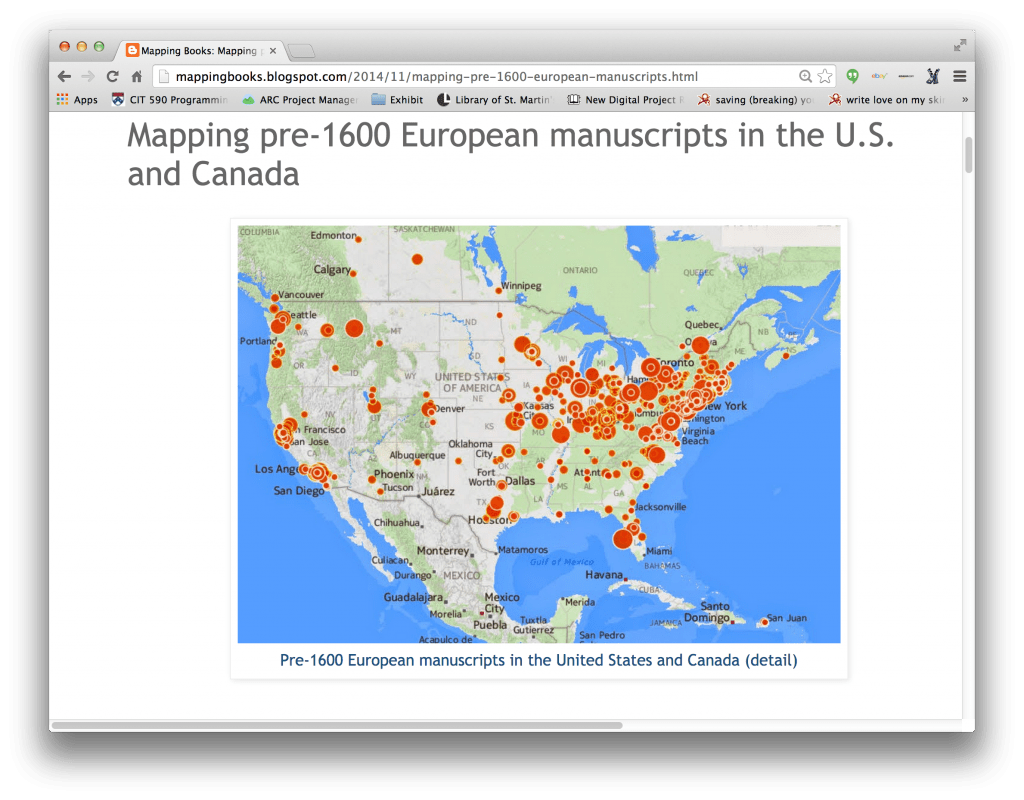

Mitch Fraas, Curator, Digital Research Services and Early Modern Manuscripts, creates maps and other visualizations relating to early books, and blogs about them at mappingbooks.blogspot.com. He’s used data from the Schoenberg Database of Manuscripts (which is available for download in comma-separated format on the SDBM website, and is updated every Sunday) and data extracted from Franklin, the Penn Libraries’ catalogue, to generate some different visualizations, one of which is shown here: Charting Former Owners of Penn’s Codex Manuscripts.

The yellow dots are owners, and the larger the dot, the more manuscripts the owner is connected to (Lawrence Schoenberg and Sotheby’s are quite large, as is Bernard M. Rosenthal, a bookseller in New York). Clicking an owner shows the number of manuscripts connected to that person or institution, and clicking a manuscript shows the number of owners connected to that manuscript. This visualization was developed using data from Franklin, and the blog post linked above provides details on how it was done.
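The sizing of the dots comes down to counting distinct manuscripts per owner. The sketch below shows that counting step on a tiny comma-separated sample; the column names and rows are invented, and it is not a reconstruction of how Mitch built the visualization (his blog post covers that).

```python
import csv
import io
from collections import defaultdict

# Invented sample in comma-separated form; column names are hypothetical.
SAMPLE_CSV = "\n".join([
    "manuscript,owner",
    "MS_A,Owner X",
    "MS_A,Owner Y",
    "MS_B,Owner X",
    "MS_B,Owner X",  # duplicate pairing, counted only once
])


def manuscripts_per_owner(csv_text: str) -> dict:
    """Count the distinct manuscripts connected to each owner."""
    owned = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        owned[row["owner"]].add(row["manuscript"])
    return {owner: len(manuscripts) for owner, manuscripts in owned.items()}


print(manuscripts_per_owner(SAMPLE_CSV))
```

Swapping the two columns in the grouping gives the reverse view, the number of owners connected to each manuscript.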

Just this week, for the 7th Annual Lawrence J. Schoenberg Symposium on Manuscript Studies in the Digital Age, Mitch has created a new map, Mapping pre-1600 European manuscripts in the U.S. and Canada, using data from the Directory of Institutions in the United States and Canada with Pre-1600 Holdings. This map shows the location of all holdings included in the directory. Larger collections have larger dots on the map. Clicking a dot will give one more information about the owner and the collection, and there are options for showing current collections or former collections, or for showing only collections with codices (full books, as opposed to fragments or single sheets).

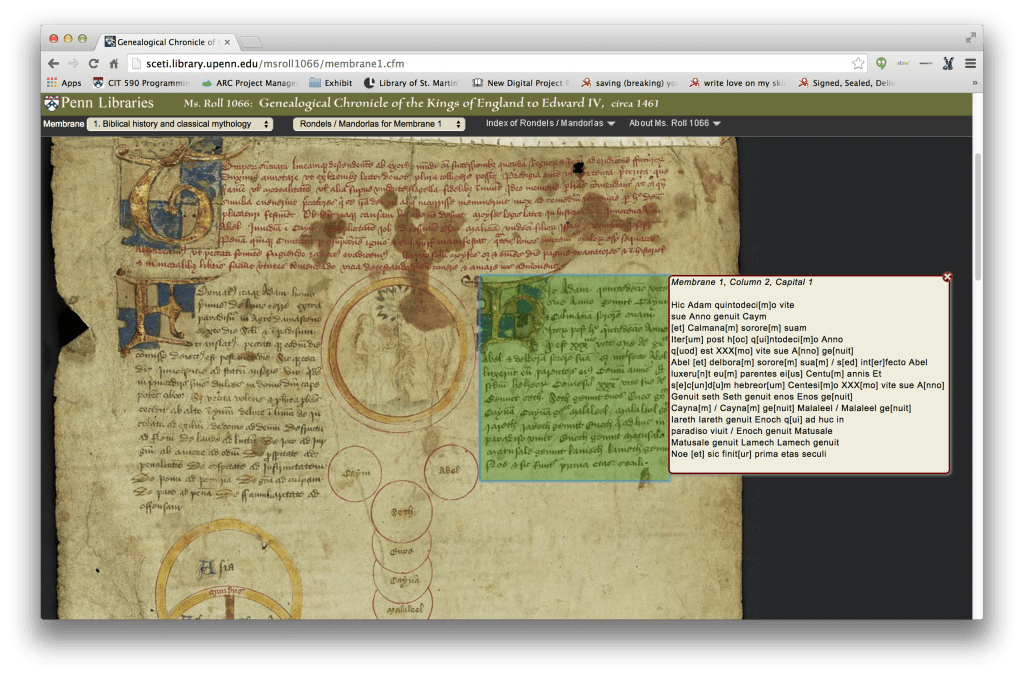

We have almost reached the end, but I would like to finish by featuring the project of last year’s SIMS Graduate Fellow, the brand new Dr. Marie Turner, which is still underway, and which is a great example of data reuse to finish on. Several years ago, Marie transcribed our Ms. Roll 1066, a 15th century genealogical roll chronicling the Kings of England from Adam to Edward IV. Her transcription was combined with images of the roll and built into a website, shown in the screenshot here, with links between her transcription and areas on the page. But Marie’s vision is larger than this single roll. There are several other rolls of this type in existence, and her vision is to expand this single project, this silo, to not only incorporate other rolls, but to become a space for collaborative editing (transcription, description, translation, and linking) for the other rolls as well. We have successfully pulled the data from the existing site and converted it into XML, following the Text Encoding Initiative Guidelines, which we’ll use to generate the data we need to import into our new software system.

The new Rolls Project will be built in DM, formerly Digital Mappaemundi, an established tool for annotating and linking images, which has been developed by Martin Foys, a medievalist, and which has recently been brought to SIMS for hosting and continued development.

This screenshot illustrates how DM looks in terms of linking annotations to areas of an image, and you can also link areas of images together. Just last week we got a production version of DM set up on our servers at Penn, and next week we’ll be importing our data – the data we exported from the earlier edition of Ms. Roll 1066 project – into that production version. We’ll also be importing images of a half dozen other genealogical rolls. We are immensely excited to move the Rolls project to the next phase – and it was all made possible by

THE REUSE OF DATA

I’d like to close with just a few thoughts about WHAT SIMS IS – and whether or not we are an effective object lesson for libraries supporting digital scholarship is probably up for debate. We certainly do scholarship, effectively, within the context of the library, and we do it ourselves: We are scholars, not service providers. However, I think it’s important to note that our scholarship, our research, our tools and our projects are not ends unto themselves. They will all serve to support more work, to allow other scholars to ask new questions, and hopefully to help them answer those questions.

Since we are not service providers, faculty and graduate students aren’t our clients, they are our collaborators, our equals, our partners. We are in this together!

Finally, and I could have said more about this throughout my talk, we take pride in our data. We want data from all of our projects – all the data that we have reused and brought in from other places – to be consistent, with regard to formatting and documentation, accessible, in the technical sense of being easy to find, and reusable, with regard to both format (it is unlikely you will find PDFs as the sole source for any information on our site) and license. Likewise our code; we make use of GitHub (a site for publishing open source code) individually and through the Library’s account, and all our code is and will always be open source.

Thanks so much again, and I’m happy to take questions now.

Reblogged this on medieval bookbindings and commented:

This is an extremely useful discussion of important work going on in the field of manuscript studies by Dot Porter of SIMS at Penn.